A Basic Guide to AI: The Three-Layer Framework for Connecting the Dots

Feeling like AI is moving faster than a cat on a Roomba? This three-layer framework breaks it down, making AI easy to grasp—whether you're new or just brushing up.

I get it—I didn’t set out to become an AI enthusiast either. I stumbled into it when I had to build an in-house forecasting model to understand customer demand in 2021 (which, in AI years, feels like forever ago). At first, it was overwhelming—so many moving pieces, so much new information. But over time, I realized something: once you strip away the noise, AI follows a simple structure that anyone can understand.

Since then, I’ve worked with NLP, LLMs, Transformers, and other AI-driven innovations, but one thing has remained true: mastering the basics goes a long way. That’s why I wrote this guide, to give you a simple three-layer framework for making sense of AI-driven developments.

Before we dive into the framework, let’s take a step back and clarify the AI landscape—what exactly is AI, and how does it all fit together?

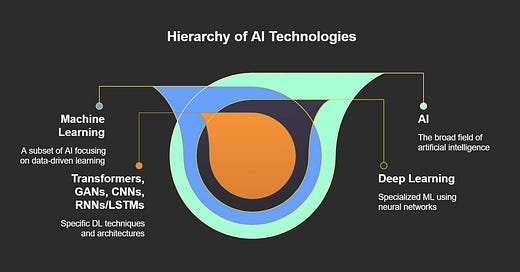

The Relationship Between AI and ML

Artificial Intelligence (AI) is the broad field of creating systems that mimic human intelligence: reasoning, problem-solving, understanding language, and learning from experience. Any form of machine intelligence falls under the AI umbrella.

Machine Learning (ML) is a subset of AI that enables systems to learn patterns from data instead of being explicitly programmed. (AI also includes non-ML approaches, such as rule-based expert systems, which we will not go into here.)

Within machine learning, common techniques include decision trees, clustering, and regression methods. Deep Learning, a specialized subset of ML, uses multi-layered neural networks to tackle highly complex problems, such as language understanding and image recognition.
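To make "learning patterns from data" concrete, here is a minimal sketch of one of the techniques named above: simple linear regression fit by ordinary least squares, in plain Python. The rule relating x to y is never written into the code; the program estimates the slope and intercept from examples. The five data points are made up for illustration.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data; the underlying pattern is never hand-coded.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = fit_line(xs, ys)
print(f"learned: y = {slope:.1f}x + {intercept:.1f}")  # learned: y = 2.0x + 1.0
print(slope * 6 + intercept)  # prediction for an unseen input x = 6 -> 13.0
```

Real forecasting models use far more data and features, but the principle is the same: parameters are estimated from examples rather than programmed by hand.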

Transformers are a specific deep learning architecture, and most modern LLMs are built on top of it.

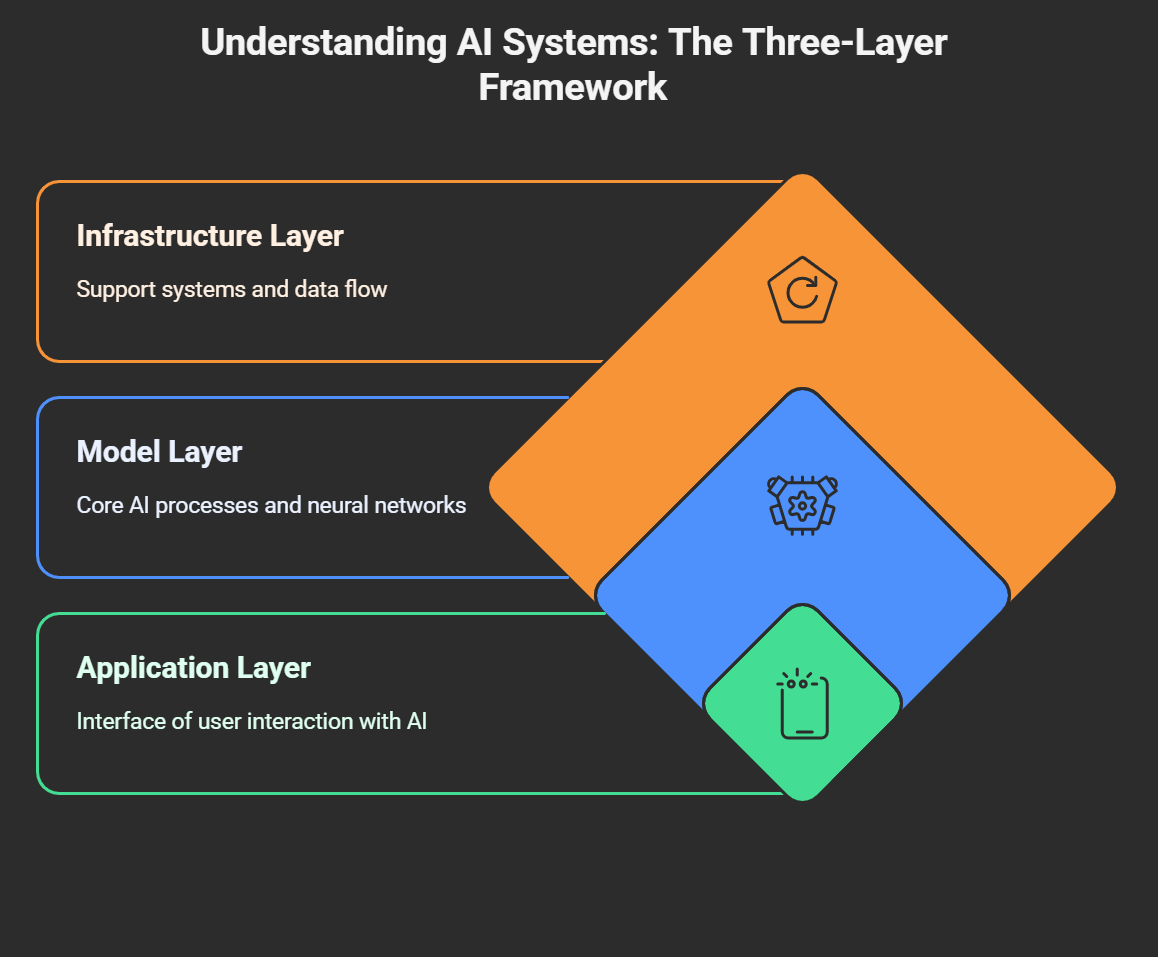

Now that we’ve covered the AI basics, let’s dive into the Three-Layer Framework—a simple way to understand how AI systems are built and operate. We’ll use a smartphone as an analogy to break down the Application, Model, and Infrastructure Layers in a way that’s easy to grasp.

1. Application Layer: The Screen and Apps (What Users Experience)

This is what you, as an end user, actually see and touch: apps like Instagram, Snapchat, or ChatGPT.

IMO, it's the fun part of AI, where the magic happens: users input requests, receive outputs, and engage with the product.

The key to building successful products at the application layer is a relentless focus on the user.

We WANT to create (as builders):

Frictionless, intuitive user experiences that solve clear, high-impact problems.

Speed matters—no long loading screens or complex onboarding. AI interactions should feel instant.

Simplicity and delight—users shouldn’t need technical knowledge to use your product.

2. Model Layer: The Processor and Software (The AI Engine)

Like a smartphone’s internal processor running complex software, this layer contains the AI models performing heavy lifting—generating predictions, recommendations, and insights.

We WANT (as builders):

Models that meet business needs within resource constraints (time, cost, talent).

A long-term view, not a myopic rush to the fastest, cheapest option.
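One way to make "meet business needs within resource constraints" concrete is to write the constraints down and filter candidates against them before comparing price. The sketch below is hypothetical: the model names, quality scores, costs, and latencies are invented placeholders, not benchmarks of real products. It picks the cheapest model that clears every requirement, rather than the cheapest model overall.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str            # hypothetical model label
    quality: float       # score on your own evaluation set, 0 to 1
    cost_per_1k: float   # dollars per 1,000 requests (illustrative)
    p95_latency_ms: int  # 95th-percentile response time

def pick_model(candidates, min_quality, max_cost_per_1k, max_latency_ms):
    """Return the cheapest candidate that satisfies every constraint, or None."""
    eligible = [
        c for c in candidates
        if c.quality >= min_quality
        and c.cost_per_1k <= max_cost_per_1k
        and c.p95_latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda c: c.cost_per_1k, default=None)

candidates = [
    Candidate("model-small", quality=0.71, cost_per_1k=0.50, p95_latency_ms=300),
    Candidate("model-medium", quality=0.86, cost_per_1k=2.00, p95_latency_ms=700),
    Candidate("model-large", quality=0.91, cost_per_1k=9.00, p95_latency_ms=1800),
]

choice = pick_model(candidates, min_quality=0.85, max_cost_per_1k=5.00, max_latency_ms=1000)
print(choice.name if choice else "no model fits; revisit the constraints")  # model-medium
```

The cheapest option ("model-small") is filtered out because it misses the quality bar, which is exactly the trap a rush to the cheapest option falls into.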

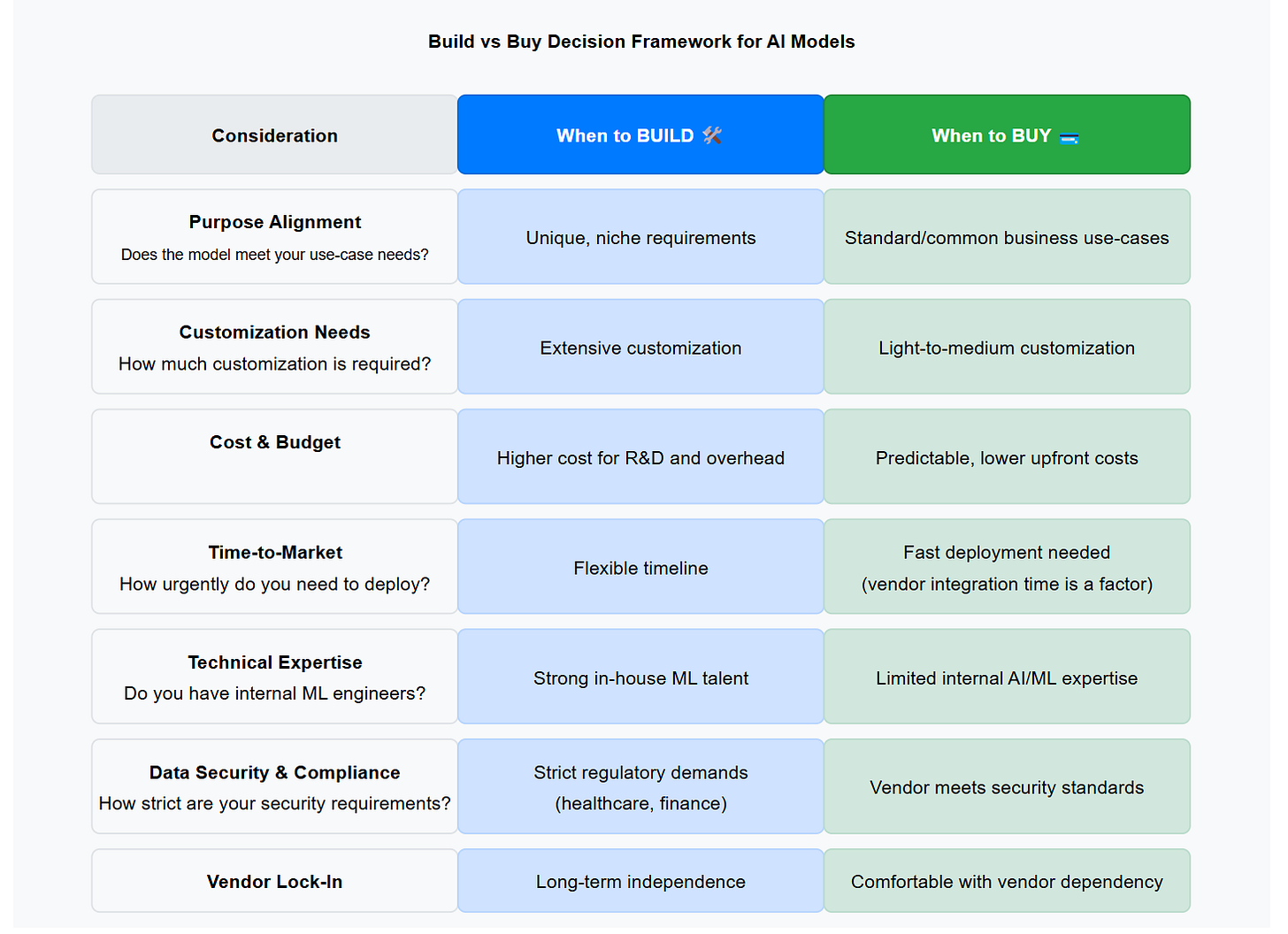

Building models in-house? My 2 cents: Do it ONLY if it gives you a distinct advantage (cost, data control, customization). Otherwise, vendor-provided models can now handle most tasks. There are a few things to consider here, so I made a checklist.

Quick checklist to guide your build vs. buy decisions:

Key Reminders for Buy vs. Build Decisions

If you're buying:

Avoid going all in on one model/vendor without researching your options.

Relying heavily on a single provider introduces risks around data privacy, safety, update frequency, and limited flexibility. Do your due diligence upfront to understand the options and risks of different providers.

Watch out for hidden operational costs.

Usage-based pricing (e.g., tokens, API calls) can quickly add up, significantly impacting your ongoing operational expenses.
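Usage-based costs are easy to underestimate because they scale directly with traffic. A back-of-the-envelope estimate multiplies requests by tokens per request by the per-token price. The prices and volumes below are hypothetical placeholders; check your provider's current pricing, which often charges input and output tokens at different rates.

```python
def monthly_token_cost(
    requests_per_day: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    input_price_per_million: float,
    output_price_per_million: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend for a model API priced per token."""
    requests = requests_per_day * days
    input_cost = requests * input_tokens_per_request * input_price_per_million / 1_000_000
    output_cost = requests * output_tokens_per_request * output_price_per_million / 1_000_000
    return input_cost + output_cost

# Hypothetical workload and prices, for illustration only.
pilot = monthly_token_cost(1_000, 800, 300, input_price_per_million=2.50, output_price_per_million=10.00)
launch = monthly_token_cost(50_000, 800, 300, input_price_per_million=2.50, output_price_per_million=10.00)
print(f"pilot: ${pilot:,.2f}/month, launch: ${launch:,.2f}/month")
# pilot: $150.00/month, launch: $7,500.00/month
```

Traffic grew 50x between the pilot and the launch, and so did the bill; that linear scaling is what "can quickly add up" looks like in practice.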

If you're building in-house:

ROI may not be immediate.

Building AI models internally demands substantial upfront investment in infrastructure (GPUs, storage), specialized talent (data scientists, ML engineers), and ongoing maintenance. Returns like competitive advantage, IP ownership, and efficiency gains often take time to materialize, so plan accordingly.
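A simple way to "plan accordingly" is a break-even estimate: how many months of savings it takes to recover the upfront investment. All figures below are hypothetical, and the model ignores discounting, ramp-up time, and changing usage, so treat it as a first-pass sanity check rather than a financial model.

```python
import math

def breakeven_months(upfront_cost: float, monthly_buy_cost: float, monthly_build_cost: float) -> float:
    """Months until building in-house pays back its upfront cost.

    Returns math.inf if building is not cheaper month to month.
    """
    monthly_savings = monthly_buy_cost - monthly_build_cost
    if monthly_savings <= 0:
        return math.inf
    return upfront_cost / monthly_savings

# Hypothetical numbers: $300k upfront (hardware, hiring, setup),
# $40k/month in vendor fees vs. $15k/month to run and maintain in-house.
months = breakeven_months(300_000, 40_000, 15_000)
print(f"break-even after about {months:.0f} months")  # break-even after about 12 months
```

If the in-house option is not cheaper to run, it never breaks even on cost alone, and the case has to rest on other advantages such as data control or customization.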

3. Infrastructure Layer: The Hardware and Systems (The Foundation)

Just as cell towers, data centers, and batteries power your smartphone reliably, this foundational layer enables AI models to run smoothly at scale, providing computing power, storage, and operational stability.

This foundational layer is critical: it's what makes AI advancement possible. Companies like NVIDIA and CoreWeave, along with cloud platforms like Microsoft Azure, thrive here, supplying the GPUs, cloud environments, and computing resources that AI models depend on.

One caveat: product managers don’t usually make infrastructure-layer decisions day to day. You need a high-level understanding of the components, but infrastructure isn’t something a company changes often (unlike the application and model layers, where you might work with different models for different use cases).

However, in organizations where infrastructure significantly impacts product performance or user experience, product managers may engage more deeply in these decisions.

We WANT (as builders):

Infrastructure that is scalable, reliable, and cost-efficient while ensuring security and compliance.

Scalability & Reliability – Infrastructure should seamlessly scale computing power as demand fluctuates while ensuring fast, uninterrupted performance to support real-time AI interactions.

Cost & Security Balance – Optimize GPU and cloud costs without compromising performance, data protection, or regulatory compliance.
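Scaling "as demand fluctuates" usually comes down to a rule that converts observed load into a number of serving replicas, bounded by a floor (for reliability) and a ceiling (for cost). The sketch below assumes each GPU replica can sustain a known number of requests per second; the throughput, headroom, and bounds are hypothetical values, and real autoscalers add smoothing and cooldowns on top of a rule like this.

```python
import math

def replicas_needed(
    requests_per_sec: float,
    per_replica_rps: float,           # assumed sustainable throughput of one GPU replica
    target_utilization: float = 0.7,  # leave 30% headroom for traffic spikes
    min_replicas: int = 2,            # floor: keep serving if one replica fails
    max_replicas: int = 20,           # ceiling: cap GPU spend
) -> int:
    """Compute how many GPU-backed serving replicas to run for the current load."""
    effective_capacity = per_replica_rps * target_utilization
    raw = math.ceil(requests_per_sec / effective_capacity)
    return max(min_replicas, min(max_replicas, raw))

for load in (5, 60, 500):
    print(load, "req/s ->", replicas_needed(load, per_replica_rps=10))
```

At low load the floor keeps two replicas running for reliability; at very high load the ceiling caps spend, which is the cost and reliability balance described above.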

Putting It All Together: The AI Chatbot Example

Imagine you're building an AI-powered customer support chatbot. Here's how the Three-Layer Framework ties it all neatly together:

📱 Application Layer (What Users Experience)

User Interface: A simple, conversational chat window where customers can easily type their questions.

Human Escalation Logic: A streamlined workflow to quickly hand over complex queries to human agents, ensuring user trust and satisfaction.
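Escalation logic is often a handful of explicit rules layered on top of the model's output. A minimal sketch, assuming the model layer returns a confidence score between 0 and 1 for each answer; the threshold, trigger phrases, and turn limit are hypothetical values you would tune for your own product.

```python
from dataclasses import dataclass

# Hypothetical settings; tune these against real conversations.
CONFIDENCE_THRESHOLD = 0.6
MAX_UNRESOLVED_TURNS = 3
HANDOFF_PHRASES = ("human", "agent", "representative", "speak to someone")

@dataclass
class Turn:
    user_message: str
    model_confidence: float  # assumed score from the model layer, 0 to 1
    unresolved_turns: int    # consecutive turns without a resolution so far

def should_escalate(turn: Turn) -> tuple[bool, str]:
    """Decide whether to hand the conversation to a human agent, and why."""
    text = turn.user_message.lower()
    if any(phrase in text for phrase in HANDOFF_PHRASES):  # simple substring match
        return True, "user asked for a person"
    if turn.model_confidence < CONFIDENCE_THRESHOLD:
        return True, "low model confidence"
    if turn.unresolved_turns >= MAX_UNRESOLVED_TURNS:
        return True, "conversation is going in circles"
    return False, "bot continues"

print(should_escalate(Turn("Can I talk to a human?", 0.95, 0)))  # (True, 'user asked for a person')
print(should_escalate(Turn("Where is my order?", 0.92, 1)))      # (False, 'bot continues')
```

Keeping these rules outside the model makes them easy to audit and adjust, which matters for the user trust the escalation path is meant to protect.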

🧠 Model Layer (AI Engine)

Language Model: A Transformer-based Large Language Model (LLM) such as GPT, handling customer interactions and providing accurate responses.

Intent Classification: A lightweight classifier (e.g., logistic regression or small neural network) that quickly identifies the type of user question and routes appropriately.

Monitoring & Feedback Loop: Continuous monitoring and user feedback collection to identify and fix knowledge gaps—enabling fine-tuning and regular model improvements.
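The intent classifier can be very small. Below is a minimal sketch of one option the text names, logistic regression, set up one-vs-rest over bag-of-words features and trained with stochastic gradient descent using only the standard library. The intents and example messages are hypothetical; a real system would train on many labeled transcripts and would more likely use a library such as scikit-learn.

```python
import math
import re
from collections import defaultdict

def tokenize(text: str) -> set[str]:
    """Lowercase bag-of-words: the set of alphabetic tokens in a message."""
    return set(re.findall(r"[a-z]+", text.lower()))

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_one_vs_rest(examples, epochs=200, lr=0.5):
    """Train one binary logistic regression per intent (that intent vs. the rest)
    with stochastic gradient descent on the log loss."""
    models = {}
    for intent in sorted({label for _, label in examples}):
        weights = defaultdict(float)  # one weight per word, starting at 0
        bias = 0.0
        for _ in range(epochs):
            for text, label in examples:
                words = tokenize(text)
                target = 1.0 if label == intent else 0.0
                error = sigmoid(bias + sum(weights[w] for w in words)) - target
                bias -= lr * error
                for w in words:
                    weights[w] -= lr * error
        models[intent] = (dict(weights), bias)
    return models

def classify(models, text: str) -> str:
    """Route a message to the intent whose classifier is most confident."""
    words = tokenize(text)
    def probability(intent: str) -> float:
        weights, bias = models[intent]
        # Words never seen in training contribute nothing.
        return sigmoid(bias + sum(weights.get(w, 0.0) for w in words))
    return max(models, key=probability)

# Tiny, made-up training set; a real classifier needs many labeled messages.
training_data = [
    ("please refund my charge", "billing"),
    ("wrong amount on invoice", "billing"),
    ("where is the package", "shipping"),
    ("delivery tracking says late", "shipping"),
    ("forgot password", "account"),
    ("cannot login to account", "account"),
]
models = train_one_vs_rest(training_data)
print(classify(models, "Hi, please refund my charge!"))     # billing
print(classify(models, "Where is the package I ordered?"))  # shipping
```

The predicted intent then decides which prompt, knowledge source, or human queue handles the message.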

⚙️ Infrastructure Layer (Foundation & Stability)

Cloud Computing & GPUs: Cloud-based platforms (AWS, Azure, GCP) supplying scalable computing resources for training and real-time model inference.

Secure Data Storage: Safe storage systems for user conversations and model-training data, fully compliant with privacy regulations.

MLOps & Automation: Automated pipelines (MLOps) to seamlessly deploy, update, and manage AI models, ensuring reliability and rapid iteration.
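A core piece of an MLOps pipeline is an automated gate that decides whether a newly trained or fine-tuned model version replaces the one in production. A minimal sketch, assuming each version has already been scored on the same fixed evaluation set; the metric names, thresholds, and version labels are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class EvalReport:
    version: str
    answer_accuracy: float  # share of eval questions answered correctly
    escalation_rate: float  # share of conversations handed to humans
    p95_latency_ms: float

def should_promote(candidate: EvalReport, production: EvalReport,
                   min_gain: float = 0.01, max_latency_ms: float = 1500) -> bool:
    """Promote only if accuracy improves meaningfully without regressing elsewhere."""
    return (
        candidate.answer_accuracy >= production.answer_accuracy + min_gain
        and candidate.escalation_rate <= production.escalation_rate
        and candidate.p95_latency_ms <= max_latency_ms
    )

prod = EvalReport("v12", answer_accuracy=0.84, escalation_rate=0.12, p95_latency_ms=900)
cand = EvalReport("v13", answer_accuracy=0.87, escalation_rate=0.10, p95_latency_ms=950)

if should_promote(cand, prod):
    print(f"deploying {cand.version}")  # deploying v13
else:
    print(f"keeping {prod.version}")
```

Because the check runs automatically on every new version, teams can iterate quickly without shipping regressions to customers.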

Optional Read: Quick Breakdown of Common Terms Using the Three-Layer Framework

📱 Application Layer (What Users Experience)

Wrapper – Applications built on top of existing AI models, offering user-friendly interfaces. (Example: Chatbot apps powered by GPT.)

Generative AI (Application-Level) – AI-powered tools that allow users to create new content: text, images, code, music, or videos. (Examples: ChatGPT, Midjourney, DALL·E.)

Agentic AI (Application-Level) – AI assistants and automation tools that autonomously complete tasks, take actions, or interact with external systems.

Prompt Engineering – The craft of designing effective prompts (inputs) that guide AI models toward accurate, useful, and relevant outputs without modifying the model itself.
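Prompt engineering and wrappers often meet in one place: the code that turns a user's raw input into a structured prompt before it reaches the model. The sketch below builds a list of role/content messages, the chat format many LLM APIs accept; the system instructions, policy text, and few-shot example are hypothetical, and the actual model call is left out because it depends on your provider's SDK.

```python
# Hypothetical instructions for a support "wrapper" app.
SYSTEM_PROMPT = (
    "You are a friendly support assistant for an online store. "
    "Answer only from the policy text below. "
    "If the answer is not in the policy, say so and offer to connect a human agent."
)

# One worked example ("few-shot") that shows the model the desired tone and format.
FEW_SHOT = [
    {"role": "user", "content": "Can I return an opened item?"},
    {"role": "assistant", "content": "Yes, within 30 days with a receipt. Want me to start a return?"},
]

def build_messages(user_input: str, policy_text: str) -> list[dict]:
    """Wrap the raw user input with instructions, context, and an example."""
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT}\n\nPolicy:\n{policy_text}"},
        *FEW_SHOT,
        {"role": "user", "content": user_input.strip()},
    ]

policy = "Returns: accepted within 30 days with a receipt. Shipping: 3-5 business days."
messages = build_messages("  How long does shipping take? ", policy)
for m in messages:
    print(f"{m['role']}: {m['content'][:50]}")
```

The model itself is untouched; only its input changes, which is what distinguishes prompt engineering from fine-tuning.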

🧠 Model Layer (The AI Engine)

Transformer & LLM (Large Language Model) – Deep learning models using the Transformer architecture, optimized for processing sequences of data (text, speech, and code). (Examples: GPT-4, Claude, Llama.)

Generative AI (Model-Level) – The core AI models that generate new content rather than just analyzing data. (Examples: Large Language Models (LLMs) for text, Diffusion Models for images.)

Agentic AI (Model-Level) – Advanced AI models capable of multi-step reasoning, planning, memory retention, and interacting with external tools or APIs to execute autonomous tasks. (Examples: AI-powered automation models, LLMs with tool use like ChatGPT Plugins.)

Prompt Engineering (Model-Level) – Crafting precise input instructions to improve a model’s responses, shaping behavior without retraining.

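Weights & Biases – The learnable parameters of a neural network, adjusted during training. Weights scale how strongly each input influences a neuron, while biases are offsets added to the weighted sum. (Not to be confused with bias in the ethical sense, meaning skewed or unfair outputs, covered under Bias & Hallucinations below.)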

Fine-Tuning – Adjusting a pre-trained model’s parameters on domain-specific data to improve accuracy and relevance for specialized tasks.
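To make weights, biases, and fine-tuning concrete, here is a single artificial neuron, the smallest building block of a neural network. In a neural network, each weight scales one input and the bias is a learned offset added before the activation. The "pretrained" parameter values and the training examples are hypothetical; the point is that fine-tuning nudges parameters that already exist, using new data, instead of starting from scratch.

```python
import math

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of inputs plus a bias, squashed to (0, 1) by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune(weights, bias, examples, lr=0.1, epochs=50):
    """Continue training existing parameters on new (inputs, target) pairs.

    Uses the log-loss gradient for a sigmoid output: (prediction - target) * input.
    """
    weights = list(weights)  # leave the "pretrained" copy unchanged
    for _ in range(epochs):
        for inputs, target in examples:
            error = neuron(inputs, weights, bias) - target
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias

# Hypothetical "pretrained" parameters.
pretrained_w, pretrained_b = [0.8, -0.5], 0.1
print(neuron([1.0, 1.0], pretrained_w, pretrained_b))  # sigmoid(0.4), about 0.599

# Hypothetical domain-specific data where the input [1, 1] should score near 1.
domain_data = [([1.0, 1.0], 1.0), ([0.0, 1.0], 0.0)]
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
print(neuron([1.0, 1.0], tuned_w, tuned_b))  # higher than before fine-tuning
```

Large models work the same way in principle, just with billions of weights and biases instead of three parameters.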

⚙️ Infrastructure Layer (Foundation & Stability)

Data Mining – Extracting and processing large datasets to discover patterns and insights, and to prepare the data used for AI model training.

Compute and GPUs – High-performance processors (like GPUs and TPUs) that handle the complex computations required for training and running deep learning models.
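A quick calculation shows why model size drives GPU choices: just holding a model's weights in memory takes roughly (number of parameters) × (bytes per parameter). This covers the weights only; serving also needs memory for activations and the attention key-value cache, and training needs several times more for gradients and optimizer state. The 7-billion and 70-billion parameter sizes are common open-model sizes, used here for illustration.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1, "int4": 0.5}

def weights_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory (GB, where 1 GB = 1e9 bytes) needed just to store the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

for params in (7e9, 70e9):
    row = ", ".join(f"{p}: {weights_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{params / 1e9:.0f}B params -> {row}")
```

A 70B model in 16-bit precision needs about 140 GB for its weights alone, more than a single 80 GB GPU holds, so it must be quantized or split across devices. That is one reason infrastructure planning matters.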

🔗 Cross-Layer Concepts (Spanning Multiple Layers)

Bias & Hallucinations (Ethical Implications) – AI models can generate incorrect, misleading, or biased outputs, requiring monitoring across all layers.
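Monitoring for hallucinations can start very simply. The sketch below is a deliberately naive heuristic, not a reliable detector: it flags answer sentences whose content words mostly do not appear in the source text the bot was given. The stopword list, the 0.5 overlap threshold, and the example texts are hypothetical choices; production systems typically combine checks like this with human review or model-based evaluation.

```python
import re

# Hypothetical, minimal stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "is", "are", "to", "of", "and", "in", "on",
             "for", "you", "your", "it", "we", "our"}

def content_words(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def flag_unsupported(answer: str, source: str, min_overlap: float = 0.5) -> list[str]:
    """Return answer sentences with too few content words found in the source."""
    source_words = content_words(source)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if words and len(words & source_words) / len(words) < min_overlap:
            flagged.append(sentence)
    return flagged

source = "Refunds are issued within 14 days of receiving the returned item."
answer = "Refunds are issued within 14 days. We also give a free gift card for every return."
print(flag_unsupported(answer, source))
# ['We also give a free gift card for every return.']
```

Word overlap misses paraphrases and can be fooled by fluent but wrong sentences that reuse source words, so treat flags as prompts for review rather than verdicts.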

Final Thoughts

AI can feel overwhelming, but clarity starts with structure. The Three-Layer Framework—Application, Model, and Infrastructure—acts as a mental model to simplify complex AI decisions. Hope this helps you in your learning journey!